As a professional in the field of artificial intelligence, I have seen firsthand the rapid advancements in deep learning and machine learning technologies. While these advancements have the potential to revolutionize industries and improve our daily lives, they also raise important ethical questions that must be addressed.


In this article, I will explore the ethical implications of deep learning and artificial intelligence, including the concept of black box machine learning, the importance of explainable AI, DeepMind’s limits on large language models, and the need for transparency and accountability in AI.

Introduction to Deep Learning and Artificial Intelligence

Before diving into the ethical implications of deep learning, it helps to have a basic understanding of what it is and how it works. Deep learning is a type of machine learning that uses artificial neural networks with many layers, loosely inspired by the structure of the human brain. Instead of following explicitly programmed rules, these networks learn patterns from large amounts of data.

Artificial intelligence, on the other hand, refers to the ability of machines to perform tasks that would normally require human intelligence, such as visual perception, speech recognition, decision-making, and language translation. Much of today's AI is powered by deep learning and other machine learning techniques, and it has the potential to transform virtually every industry, from healthcare and finance to transportation and entertainment.

Understanding the Concept of Black Box Machine Learning

One of the biggest challenges with deep learning is the black box problem: deep learning models are often so complex that it is hard to interpret them or to determine how they arrived at a particular decision or recommendation. This lack of transparency is problematic because it allows bias, discrimination, and errors to go unnoticed and makes them harder to correct.

For example, in the criminal justice system, algorithms are used to predict recidivism, the likelihood that a defendant will reoffend. However, these algorithms are often trained on biased historical data, which can perpetuate systemic racism and discrimination. And because the algorithms are often black boxes, it is hard to see how they arrived at their predictions, which makes their accuracy and fairness difficult to challenge.

The Ethical Implications of Black Box Machine Learning

The ethical implications of black box machine learning are numerous and can have significant consequences for individuals and society as a whole. One of the biggest concerns, as mentioned above, is the potential for bias and discrimination. If the data used to train an algorithm is biased, the algorithm is likely to learn and reproduce that bias, leading to unfair outcomes for certain groups of people.
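
Bias of this kind can often be measured even when the model itself cannot be inspected, because an audit only needs the model's predictions and the real outcomes. The following is a minimal illustration, not a standard tool: it compares false positive rates across groups, which for a recidivism tool means the share of people who did not reoffend but were still flagged as high risk. The record format, the function name `false_positive_rates`, and the data are hypothetical.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is a hypothetical (group, flagged_high_risk, reoffended) tuple.
    A false positive is a person flagged as high risk who did not reoffend.
    """
    flagged = defaultdict(int)    # non-reoffenders who were flagged, per group
    negatives = defaultdict(int)  # all non-reoffenders, per group
    for group, flagged_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if flagged_high_risk:
                flagged[group] += 1
    return {group: flagged[group] / negatives[group] for group in negatives}


# Hypothetical toy data for illustration only; not real outcomes.
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", True, True),
]
rates = false_positive_rates(records)
print({group: round(rate, 2) for group, rate in rates.items()})  # {'A': 0.33, 'B': 0.67}
```

A gap like this does not by itself prove that a model is unfair, since groups can differ in base rates and different fairness criteria can conflict, but it shows where a closer look is needed.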

Another concern is the lack of transparency and accountability. If we do not know how an algorithm arrived at a particular decision, it is difficult to challenge its accuracy or fairness. This lack of transparency can also make it more difficult to identify and correct errors or biases in the system.

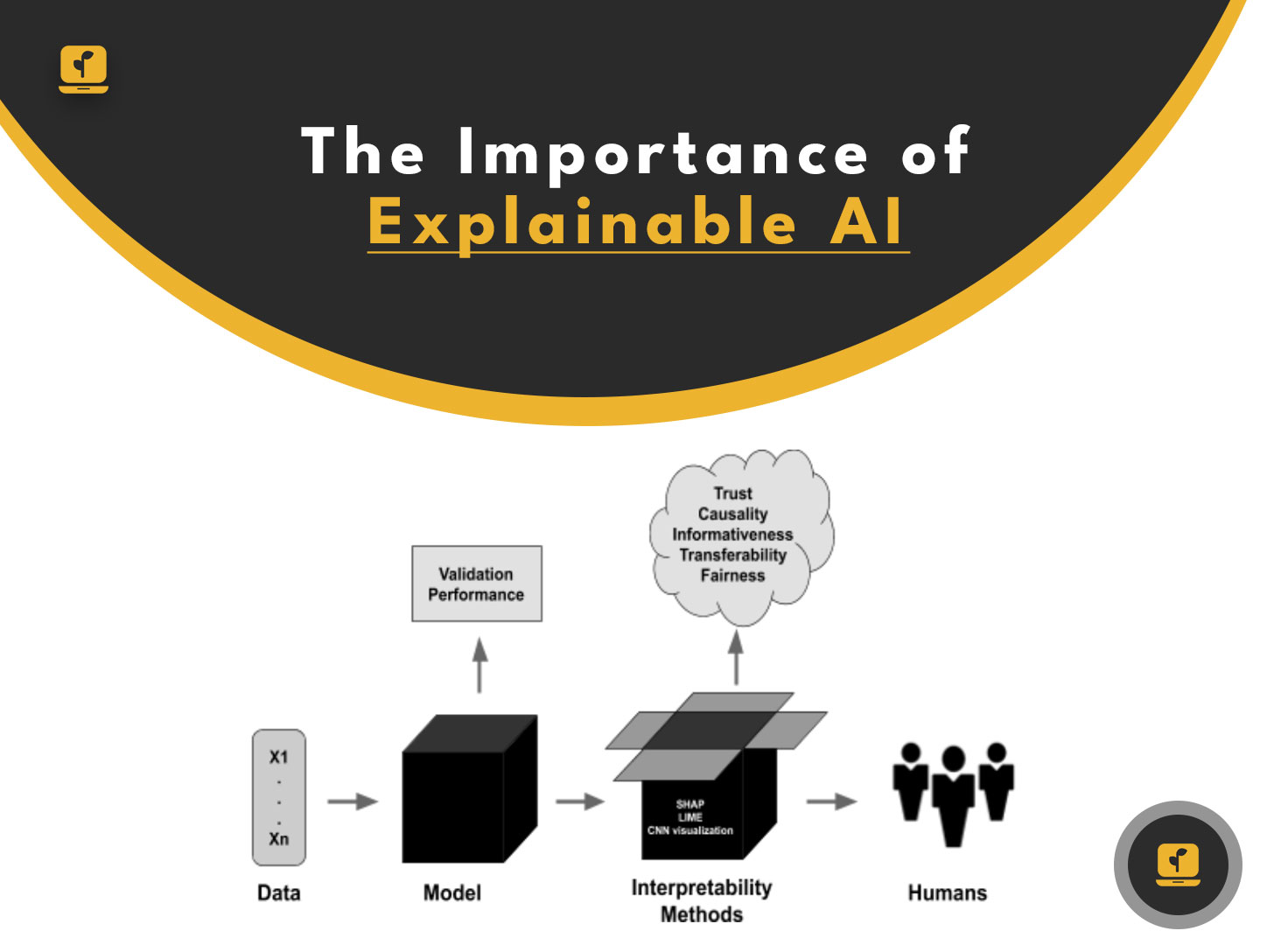

The Importance of Explainable AI

To address the ethical implications of black box machine learning, it is important to develop explainable AI. Explainable AI refers to models and techniques that can explain how an algorithm arrived at a particular decision or recommendation. Such explanations increase transparency and accountability, and they also help to identify and correct errors or biases.

One approach to explainable AI is to use inherently interpretable models, such as decision trees or rule-based systems, which are more transparent and easier to understand than neural networks. Another approach is to generate counterfactual explanations, which show how changing certain input variables would have changed the algorithm's output.
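
To illustrate both approaches together, here is a minimal sketch: a small rule-based model that returns the rule behind each decision, plus a brute-force counterfactual search that raises one input until the decision flips. The loan-approval setting, the `Applicant` fields, the thresholds, and the function names are all hypothetical. Practical counterfactual methods usually search over several features at once for the smallest overall change; this version varies income only.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Applicant:
    income: float      # annual income in thousands (hypothetical feature)
    debt_ratio: float  # debt as a fraction of income (hypothetical feature)


def decide(applicant: Applicant) -> Tuple[bool, str]:
    """Transparent rule-based model: returns the decision and the rule that produced it.

    The thresholds (0.4 and 30) are hypothetical, chosen for illustration.
    """
    if applicant.debt_ratio > 0.4:
        return False, "rejected: debt ratio above 0.4"
    if applicant.income < 30:
        return False, "rejected: income below 30k"
    return True, "approved: debt ratio at most 0.4 and income at least 30k"


def income_counterfactual(
    applicant: Applicant, step: float = 1.0, max_income: float = 200.0
) -> Optional[float]:
    """Smallest income (searched in `step` increments) that turns a rejection into an approval.

    All other features are held fixed. Returns None if the applicant is already
    approved or if no income up to `max_income` changes the outcome.
    """
    if decide(applicant)[0]:
        return None
    income = applicant.income + step
    while income <= max_income:
        if decide(Applicant(income, applicant.debt_ratio))[0]:
            return income
        income += step
    return None


applicant = Applicant(income=25, debt_ratio=0.3)
approved, reason = decide(applicant)
print(reason)                            # rejected: income below 30k
print(income_counterfactual(applicant))  # 30.0
```

The rule-based explanation reads as a plain sentence, and the counterfactual turns it into something actionable ("approved if income were 30k"). For an applicant rejected on debt ratio, raising income alone never flips the decision, so the search returns None, which is itself informative.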

DeepMind’s Limits on Large Language Models

Recently, DeepMind, a leading AI research lab, announced that it would be placing limits on its use of large language models. These models generate natural-language text and power applications such as chatbots and machine translation systems. However, they are often difficult to interpret and can perpetuate biases and errors.

The limits imposed by DeepMind include restrictions on the size and scope of language models, as well as increased transparency and accountability measures. While some have praised the move as a step in the right direction, others have criticized it as too little, too late.

The Controversy Surrounding DeepMind’s Limits on AI Language Systems

The controversy surrounding DeepMind’s limits on AI language systems highlights the importance of transparency and accountability in the development and implementation of AI. Some critics argue that the limits do not go far enough, and that more needs to be done to address the ethical implications of AI.

Others argue that the limits could have unintended consequences, such as stifling innovation or hindering the development of useful AI technologies. Ultimately, the debate underscores the need for a balanced approach to AI development, one that takes into account both the potential benefits and risks.

The Need for Transparency and Accountability in AI

Regardless of the controversy surrounding DeepMind’s limits on AI language systems, it is clear that transparency and accountability are essential in AI. As AI becomes more pervasive in our daily lives, it is important that we have a clear understanding of how it works and how it makes decisions.

This requires not only increased transparency and accountability on the part of developers and businesses, but also increased education and awareness among the general public. Only by working together can we ensure that AI is developed and implemented in an ethical and responsible manner.

Steps Being Taken to Address Ethical Issues with AI

Fortunately, there are a number of steps being taken to address the ethical issues with AI. For example, organizations such as the Partnership on AI are working to develop ethical guidelines and best practices for AI development and implementation.

Governments around the world are also beginning to take action, with some countries implementing regulations and oversight measures to ensure that AI is developed and used in a responsible manner. Furthermore, there is a growing movement among AI researchers and practitioners to prioritize ethical considerations in their work.

Ethical Considerations for Businesses and Developers Implementing AI

For businesses and developers implementing AI, there are a number of ethical considerations to take into account. These include checking training data for bias and making sure it is representative of the population the system will serve, increasing the transparency and accountability of the system, and prioritizing the safety and well-being of individuals.
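
Checking whether training data is representative requires a reference point. A minimal sketch, assuming the share of each group in the target population is known, compares those shares with the training data and flags groups that fall short by more than a tolerance. The function names, the group labels, the reference shares, and the 5-percentage-point tolerance are hypothetical.

```python
from collections import Counter


def representation_gaps(train_groups, population_shares):
    """Share of each group in the training data minus its share in the reference population.

    Negative values mean the group is under-represented in the training data.
    """
    counts = Counter(train_groups)
    total = len(train_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


def underrepresented_groups(train_groups, population_shares, tolerance=0.05):
    """Groups whose training-data share falls short of the population share by more than `tolerance`."""
    gaps = representation_gaps(train_groups, population_shares)
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical example: group B is 40% of the population but only 20% of the training data.
train_groups = ["A"] * 80 + ["B"] * 20
population_shares = {"A": 0.6, "B": 0.4}
print(underrepresented_groups(train_groups, population_shares))  # ['B']
```

Matching population shares is necessary but not sufficient: a dataset can be perfectly representative and still encode biased labels, such as historical decisions that were themselves discriminatory.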

Furthermore, businesses and developers should be prepared to address any unintended consequences or negative impacts that may arise from the implementation of AI. This requires ongoing monitoring and evaluation of the system, as well as a willingness to adapt and change as needed.
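
Ongoing monitoring often starts with a simple question: does the data the system sees in production still look like the data it was trained on? One widely used measure is the population stability index (PSI), which bins a feature or score and sums, over all bins, the difference between the current and baseline shares multiplied by the log of their ratio. This is a minimal sketch; the bin edges, the small constant that keeps the logarithm finite for empty bins, and the 0.25 alert threshold are assumptions (0.1 and 0.25 are commonly cited rules of thumb, not a standard), and the scores are hypothetical.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Fraction of values in each bin; `edges` are sorted interior bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for value in values:
        counts[bisect_right(edges, value)] += 1
    return [count / len(values) for count in counts]


def population_stability_index(baseline, current, edges, eps=1e-6):
    """PSI between a baseline (e.g. training-time) sample and a current (production) sample.

    `eps` replaces empty-bin shares so the logarithm stays finite.
    """
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical model scores: production scores have shifted toward the high end.
edges = [0.25, 0.5, 0.75]
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
current_scores = [0.1, 0.3, 0.6, 0.6, 0.7, 0.7, 0.8, 0.9]
psi = population_stability_index(baseline_scores, current_scores, edges)
ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb threshold, not a standard
if psi > ALERT_THRESHOLD:
    print(f"drift alert: PSI = {psi:.2f}")  # drift alert: PSI = 0.35
```

A PSI value says nothing about why the distribution moved; it is best treated as a trigger for a human review of the model and its data, the kind of ongoing evaluation and willingness to adapt described above.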

Conclusion: Balancing the Benefits and Risks of AI

In conclusion, the ethical implications of deep learning and artificial intelligence are significant and must be addressed to ensure that these technologies are developed and implemented responsibly. While there are certainly risks and challenges associated with AI, there are also significant benefits, including improved efficiency, accuracy, and quality of life.

Ultimately, the key is to strike a balance between the benefits and risks of AI, and to prioritize ethical considerations every step of the way. By working together, we can ensure that AI is used to benefit society as a whole, while minimizing the potential for harm or unintended consequences.