Two competing technologies promise to eliminate stuttering and tearing in video games.

You can’t go back once you’ve used a variable refresh rate monitor. AMD’s FreeSync and Nvidia’s G-Sync technologies offer a buttery-smooth gaming experience free of stuttering, screen tearing, and the input lag that V-Sync introduces. Both ecosystems, however, have pluses and minuses.

Now that adaptive sync monitors have been out for a few years, it’s time to weigh in on FreeSync vs. G-Sync once more. Here you’ll find everything you need to know about AMD’s and Nvidia’s gaming monitor technologies.

What is adaptive sync, also known as variable refresh rate?

Before we get into the differences between FreeSync and G-Sync, let’s take a look at adaptive sync, or variable refresh rate, the technology that underpins both.

Traditional monitors refresh their image on a fixed schedule: a 60Hz monitor, for example, refreshes every 1/60th of a second. Your graphics card, on the other hand, sends frames to the screen as quickly as it can render them, with no regard for that schedule. When a frame arrives in the middle of a refresh, screen tearing occurs because your monitor displays part of one frame and part of the next onscreen simultaneously.
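To put numbers on that fixed schedule, here is a minimal Python sketch. The function names are made up for illustration and do not come from any driver or monitor API, and the model is deliberately simplified: it only checks whether a frame lands between two refresh boundaries, which is when tearing can show up if nothing synchronizes the two.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between refreshes on a fixed-rate display, in milliseconds."""
    return 1000.0 / refresh_hz


def arrives_mid_refresh(frame_time_ms: float, refresh_hz: float,
                        tolerance_ms: float = 0.01) -> bool:
    """True if a frame lands between two refresh boundaries.

    Simplified model: a frame that arrives away from a boundary is a
    tearing risk when no synchronization is in use.
    """
    interval = refresh_interval_ms(refresh_hz)
    offset = frame_time_ms % interval
    # Distance to the nearest refresh boundary, before or after the frame.
    return min(offset, interval - offset) > tolerance_ms


if __name__ == "__main__":
    print(round(refresh_interval_ms(60), 2))   # interval of a 60Hz display
    print(arrives_mid_refresh(25.0, 60))       # frame delivered 25 ms in
```

On a 60Hz display the interval works out to roughly 16.67 milliseconds, so a frame delivered 25 milliseconds in falls about halfway through the second refresh.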

It looks as though the image is trying to split in two and slide in opposite directions, and it only gets worse as your game’s frame rate fluctuates more. It’s unattractive. Embarrassingly unattractive.

The V-Sync feature on your graphics card helps, but it comes with its own drawbacks: stuttering and added input lag, because the technology instructs your graphics card to hold each new frame until the display is ready for it.
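The cost of that waiting can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not how any driver implements V-Sync: it assumes refreshes happen at exact multiples of the refresh interval starting at time zero, and the function names are hypothetical.

```python
import math


def vsync_present_time_ms(frame_ready_ms: float, refresh_hz: float) -> float:
    """Time of the first refresh boundary at or after the frame is ready."""
    interval = 1000.0 / refresh_hz
    return math.ceil(frame_ready_ms / interval) * interval


def vsync_wait_ms(frame_ready_ms: float, refresh_hz: float) -> float:
    """How long a finished frame sits idle before the display shows it."""
    return vsync_present_time_ms(frame_ready_ms, refresh_hz) - frame_ready_ms


if __name__ == "__main__":
    # A frame finished 20 ms in must wait for the second refresh of a 60Hz display.
    print(round(vsync_wait_ms(20.0, 60), 2))
```

In this model, a frame that finishes 20 milliseconds in on a 60Hz display waits about 13 milliseconds for the next refresh. That idle time is the added input lag, and the uneven waits from frame to frame are one source of the stutter.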

By syncing the refresh rate of your display with the frame rate of your graphics card (up to the monitor’s maximum refresh rate), FreeSync and G-Sync eliminate all of these issues. As soon as your video card sends out a new frame, the adaptive sync monitor shows it. If your graphics card generates 52 frames per second, your monitor refreshes at 52Hz. The ultimate result? Noticeably smoother gameplay.
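As a rough illustration, the matching rule can be written as a small Python function. This is a sketch only: the function name is hypothetical, and the 48Hz to 75Hz range in the usage lines is just an example of the kind of supported range a monitor might advertise.

```python
from typing import Optional


def adaptive_refresh_hz(gpu_fps: float, min_hz: float,
                        max_hz: float) -> Optional[float]:
    """Refresh rate an adaptive sync display would run at.

    Returns None when the frame rate is below the supported range,
    meaning the display can no longer follow the graphics card.
    """
    if gpu_fps < min_hz:
        return None
    # Above the range, the display is capped at its maximum refresh rate.
    return min(gpu_fps, max_hz)


if __name__ == "__main__":
    print(adaptive_refresh_hz(52, 48, 75))   # inside the range
    print(adaptive_refresh_hz(90, 48, 75))   # capped at the maximum
    print(adaptive_refresh_hz(30, 48, 75))   # below the range
```

Inside the range the display simply follows the graphics card, above it the display is capped at its maximum, and below it adaptive sync no longer applies.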

Implementation of FreeSync vs. G-Sync

AMD and Nvidia take two entirely different approaches when it comes to adaptive sync technology.

FreeSync uses the VESA Adaptive-Sync standard, part of DisplayPort 1.2a. AMD doesn’t collect royalties or licensing fees, and the technology works with the off-the-shelf display scalers that monitor manufacturers already use.

Incorporating FreeSync into a display costs very little. As a result of this openness, FreeSync is available on many monitors, from low-cost entry-level displays to high-end gaming displays.

Nvidia’s proprietary hardware module powers G-Sync monitors.

The G-Sync requirements are substantially more stringent. The technology necessitates the use of a proprietary hardware module by display manufacturers, and Nvidia maintains a tight grip on quality control, collaborating with manufacturers on everything from panel selection through display development to final certification.

G-Sync is a premium add-on for high-end gaming displays, so G-Sync monitors often start at a significantly higher price point. As a result, G-Sync panels are rarely paired with low-cost or mainstream gaming PCs, though with G-Sync, you always know what you’re getting.

Pros and cons of AMD FreeSync

The key benefits of FreeSync are its openness and low implementation cost.

Because of the lower barrier to entry, AMD’s adaptive sync technology can be found in displays costing as little as $130, allowing even budget gamers to take advantage of FreeSync’s benefits. G-Sync can’t hold a candle to this. The cheapest G-Sync monitor presently available is the 27-inch Lenovo 65BEGCC1US, now on sale for $330 on Newegg, and the vast majority of G-Sync displays cost well over $500. Newegg’s FreeSync listings, by contrast, include 154 distinct monitors under $500.

That affordability is a huge edge for AMD. These are monitors that ordinary people can actually buy.

A screenshot of the AMD FreeSync monitor listings on Newegg.

FreeSync’s openness has aided its widespread adoption. Newegg lists more than three times as many FreeSync displays as G-Sync panels. (However, according to Nvidia, there are more than 120 G-Sync displays and laptops available.)

The openness of FreeSync has certain downsides, too. Compared with purchasing a G-Sync display, shopping for a FreeSync monitor is a pain in the neck.

Many low-cost FreeSync displays support adaptive sync only within a narrow refresh-rate range, such as 48Hz to 75Hz. The supported range varies from monitor to monitor, and some are quite limited. Fortunately, they’re all listed under the “monitors” portion of the table at the bottom of AMD’s FreeSync page.

On AMD’s FreeSync page, you can see which adaptive sync refresh-rate ranges are supported, as well as whether FreeSync works over DisplayPort or HDMI.

Because of the lax standards, you’ll have to keep a careful eye on each monitor’s features. For example, AMD added a feature to FreeSync called Low Framerate Compensation (LFC) that improves how FreeSync displays behave when the frame rate drops below the minimum supported refresh rate (48Hz, in the last example).

When frame rates fall below the FreeSync minimum, monitors with LFC duplicate frames, bringing the refresh rate back into the FreeSync range. If your graphics card outputs 30 frames per second, LFC shows each frame twice and runs the display at 60Hz to keep things smooth. It’s fantastic!
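The frame-doubling arithmetic can be sketched as follows. This is an illustrative model rather than AMD’s actual algorithm: the function name is hypothetical, and it assumes the display simply repeats each frame a whole number of times, using the smallest multiplier that lifts the refresh rate into the supported range.

```python
import math
from typing import Optional, Tuple


def lfc_refresh(gpu_fps: float, min_hz: float,
                max_hz: float) -> Optional[Tuple[int, float]]:
    """Return (times each frame is shown, resulting refresh rate in Hz).

    Returns None when no whole-number multiplier fits inside the
    supported range.
    """
    if gpu_fps <= 0:
        raise ValueError("gpu_fps must be positive")
    if gpu_fps >= min_hz:
        # Already inside (or above) the range: no duplication needed.
        return (1, min(gpu_fps, max_hz))
    multiplier = math.ceil(min_hz / gpu_fps)
    refresh = gpu_fps * multiplier
    if refresh > max_hz:
        return None
    return (multiplier, refresh)


if __name__ == "__main__":
    print(lfc_refresh(30, 48, 75))    # each frame shown twice
    print(lfc_refresh(40, 48, 75))    # doubling overshoots a narrow range
    print(lfc_refresh(40, 48, 144))   # a wider range has room for it
```

With a 48Hz to 75Hz range, 30 frames per second doubles neatly to 60Hz, but 40 frames per second would double to 80Hz and overshoot the maximum. Under this simple model, frame duplication only covers every low frame rate when the range is wide enough.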

LFC is also optional and found only in more expensive panels. Moving into and out of the FreeSync range without LFC is jarring, since you’ll go from silky-smooth gaming to stuttering or screen tearing in an instant. To get the best FreeSync experience, you’ll need to do your research.

Instead of the standard DisplayPort requirement, some displays allow you to use HDMI connections with FreeSync.

Another benefit of FreeSync is its connectivity. Because FreeSync monitors employ a standard display scaler, they usually offer a wide range of ports. DisplayPort and HDMI are the only options on G-Sync screens.

While both FreeSync and G-Sync were initially only available via DisplayPort, AMD has since added FreeSync over HDMI, allowing the technology to be used on even more monitors.

HDMI support increases FreeSync’s flexibility, but it’s yet another factor to check before purchasing. Along with the aforementioned FreeSync ranges, AMD’s website lists which connections each display supports.

Pros and cons of Nvidia G-Sync

The primary benefit of G-Sync is that it provides a consistent, high-quality gaming experience.

Every G-Sync display must pass Nvidia’s rigorous certification procedure, which has rejected displays in the past. Nvidia hasn’t made the requirements public, but according to reps, the company works directly with panel manufacturers like AU Optronics to optimize flicker properties, refresh rates, response times, and visual quality. It also works with display manufacturers like Asus and Acer to fine-tune the onscreen display and more. All displays are calibrated to the sRGB color gamut.

Every G-Sync monitor supports an equivalent of AMD’s Low Framerate Compensation, ensuring a smooth gaming experience. “Frequency dependent variable overdrive” is likewise supported by all G-Sync displays. Without going into too much detail, the technology prevents ghosting on G-Sync displays, which was a problem with early FreeSync panels but is now less common.

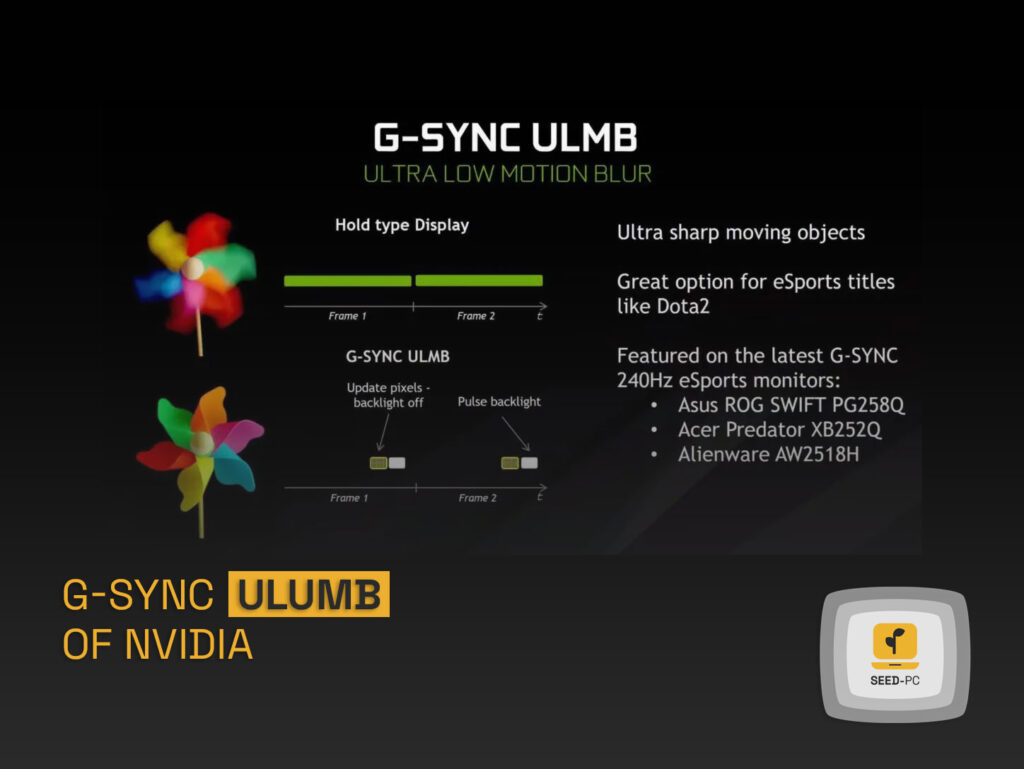

How does G-Sync ULMB work?

While G-Sync started with an emphasis on adaptive sync, Nvidia claims it has grown to include “the best gaming displays.”

Extra features on some G-Sync panels include refresh rate overclocking and Ultra Low Motion Blur (ULMB), which keeps text and other onscreen elements from blurring at very high refresh rates. For e-sports games, it’s a game-changing feature.

Early ULMB displays were infamous for being dark, but new 240Hz G-Sync monitors from Asus, Acer, and Alienware pulse their backlight at a scorching 400 nits of brightness, putting an end to that problem. However, you can’t use G-Sync adaptive sync and ULMB simultaneously.

In terms of drawbacks, the restricted port selection may be an issue in some situations, but G-Sync’s major flaw is its cost. G-Sync is a premium gaming experience available only on high-end monitors.

You get what you pay for, but adaptive sync is most helpful with inexpensive graphics cards that struggle to hit 60 frames per second, and most GeForce GTX 1050 owners will never experience it. Because G-Sync displays are so expensive, Nvidia’s technology is effectively limited to high-end consumers.

Graphics cards: FreeSync vs. G-Sync

The major drawback is that neither company’s adaptive sync technology works with the other’s graphics cards. GeForce graphics cards need a G-Sync monitor for adaptive sync, whereas Radeon graphics cards can only use FreeSync screens.

You’ll need a GeForce GTX 600-series (or newer) graphics card to use G-Sync. FreeSync requires a Radeon Rx 200-series (or later) graphics card, though some specific models aren’t supported.

If you’re running a Radeon that predates the RX 400-series, you’ll need to do some research to make sure it’s compatible. Go to AMD’s FreeSync page and click the “Products” tab on the chart at the bottom to view a complete list of supported graphics cards.

Laptops: G-Sync vs. FreeSync

Almost every gaming laptop maker has a G-Sync-equipped laptop on the market. Earlier models topped out at 75Hz, while modern laptops can reach 120Hz.

In the mobile sector, where Nvidia GPUs are far more common, FreeSync is usually overlooked. Still, the $1,500 Asus ROG Strix GL702ZC, the first-ever AMD Ryzen laptop, includes Radeon graphics and a FreeSync display. HP also released an Omen 17 gaming laptop with a Radeon RX 580 graphics card and FreeSync ($1,200 at Best Buy).

G-Sync HDR vs. FreeSync 2: What’s next?

With FreeSync 2 and G-Sync HDR, AMD and Nvidia are ushering in a new era of gaming monitors, now that ultra-high-resolution displays are becoming more common, and high dynamic-range PC monitors have finally arrived.

Both are HDR-optimized and, for the time being, will most likely be limited to high-end displays. Here are the highlights.

FreeSync 2 abandons FreeSync’s openness in favor of a more tightly regulated environment. Monitors must support Low Framerate Compensation (LFC) to receive FreeSync 2 certification. AMD also certifies screens for low latency and for a dynamic color and brightness range twice that of ordinary sRGB displays.

The FreeSync 2 API tells a game about your HDR monitor’s native capabilities, allowing the software to map its output directly to what your screen can display, for the best possible visual quality with minimal added lag.

Huzzah! When you start a game that supports it, FreeSync 2 automatically switches to “FreeSync mode,” increasing the brightness and color gamut, so you don’t have to keep your visual settings at eye-searing levels while you’re cruising around the Windows desktop.

Samsung’s crazy 49-inch ultrawide monster was one of the first FreeSync 2 monitors.

Samsung’s curved 32-inch CHG70 ($700 on Amazon) and the colossal 49-inch, curved CHG90 ($1,416 on Amazon) were the first FreeSync 2 monitors to enter the market in 2017. We tested the CHG70 and found it to be a fantastic display, even though HDR on Windows still needs improvement. A few more monitors have launched since then, and Microsoft’s Xbox One X console now supports FreeSync 2.

Nvidia also revealed G-Sync HDR monitors in 4K 144Hz and ultrawide 200Hz varieties, and they appear to be the holy grail of PC displays. Each has 1,000 nits of brightness and hundreds of backlight zones, as well as ultra-fast refresh rates, AdobeRGB color gamut coverage, and quantum dot technology.

Nvidia hasn’t released the formal specifications for G-Sync HDR displays, which need a different, next-gen version of Nvidia’s proprietary hardware module than standard G-Sync displays. Still, representatives claim that every G-Sync HDR display will look as good as these early versions.

After a long wait, the Acer Predator X27 and Asus ROG Swift PG27UQ, the first 4K G-Sync HDR screens, are finally available. Each costs $2,000.

The cutting edge isn’t cheap, but as seen in our Acer Predator X27 review, these panels’ speed and superb visual quality are unrivaled. G-Sync HDR appears to push the state of the art forward significantly.